NES Emulator in GLSL

In 2020, I implemented an accurate emulation of the MOS 6502 microprocessor entirely in GLSL. Combined with my own implementation of the NES's Picture Processing Unit (a predecessor to modern GPUs), this let me emulate the console's titles in real time entirely on the GPU. Tackling this complex task in an unusual way put my graphics knowledge to the test.

For this project, I hijacked a standard OpenGL graphics pipeline to perform general computation without compute shaders being available. I also accurately recreated the console's rendering capabilities.

Some time later, I came back to the project looking to optimize it to meet a 60fps performance target. By moving most of the computational load to the vertex shader, I was able to perform data transfers with optimized packed structures that removed most of the redundant processing across fragments. This improved performance massively, allowing for full-speed emulation.
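To give a sense of the per-instruction work such an emulator performs, here is a minimal sketch of the 6502 `ADC` (add with carry) instruction and its flag updates. It is written in Python for readability rather than GLSL, it is not the project's code, and the `Cpu` class and `adc` function are hypothetical names.

```python
from dataclasses import dataclass

# Bit masks of the 6502 status register: carry, zero, overflow, negative.
FLAG_C, FLAG_Z, FLAG_V, FLAG_N = 0x01, 0x02, 0x40, 0x80


@dataclass
class Cpu:
    a: int = 0  # accumulator (8 bits)
    p: int = 0  # processor status flags


def adc(cpu: Cpu, operand: int) -> None:
    """Add with carry in binary mode (the NES CPU has no decimal mode)."""
    carry_in = cpu.p & FLAG_C
    total = cpu.a + operand + carry_in
    result = total & 0xFF
    # Clear the four flags this instruction rewrites.
    cpu.p &= ~(FLAG_C | FLAG_Z | FLAG_V | FLAG_N) & 0xFF
    if total > 0xFF:
        cpu.p |= FLAG_C
    if result == 0:
        cpu.p |= FLAG_Z
    # Overflow: both inputs share a sign that differs from the result's sign.
    if (~(cpu.a ^ operand) & (cpu.a ^ result)) & 0x80:
        cpu.p |= FLAG_V
    if result & 0x80:
        cpu.p |= FLAG_N
    cpu.a = result
```

Adding `0x50` to `0x50` yields `0xA0` with the overflow and negative flags set, which is the kind of edge case an accurate emulation has to reproduce.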

OpenGL

I am able to work with low-level APIs to set up my own rendering pipelines without relying on already built general-purpose engines.

This code snippet comes from my answer to an exam I took during my bachelor's degree, in which we were tasked with writing a program that met a set of requirements from scratch, within a one-hour limit and without any external resources.

Vulkan

Recently, I have been learning the more modern Vulkan API. My knowledge of general graphics concepts and of the OpenGL API has made getting used to this new environment much easier. While its interface is quite verbose, I am excited about the level of control it enables, especially because most operations are explicit, which leaves little room for hidden, implementation-dependent behavior. I am also glad for the inclusion of SPIR-V as an intermediate step in shader compilation, given how much of a headache inconsistencies across hardware vendors have already been for me.

Modding a Custom Engine Renderer

While working on a project within Minecraft, I ran into limitations caused by its rendering engine. To achieve the results I wanted, I resorted to dynamic code injection via Mixins. This way, I could implement new features on top of the existing renderer, which is written in Java using OpenGL.

First, I created a context manager utility for loading shaders and handling the exceptions that arose in the process. It proved very useful as a development tool, since it let me iterate on shader integration more comfortably.

I also gave myself the ability to inject arbitrary data-driven uniforms into both core and postprocessing shaders. In the game's engine, these two systems are separate and, while they share a lot of code, expose quite a few inconsistencies. I smoothed over some of these inconveniences with my implementation.

Another problem I found with the vanilla renderer is that the depth buffer was often overwritten by inconsequential render passes and could not be used properly during postprocessing. To fix the issue, I rearranged the way framebuffers are bound during rendering.

For this use case, much of the game logic would run server-side on my custom backend, and many resources such as shaders would be streamed to clients dynamically. It was therefore desirable to control the renderer from the server, which would also minimize the number of updates we had to push to the client during development. As such, I implemented networking to control aspects of the rendering remotely.

With my extended version of the engine, I was able to implement many effects that would have been impossible otherwise. The clips below showcase remotely data-driven animations, uses of the improved custom postprocessing, and many added renderers (used, for instance, for HUDs).

Shader context manager

Postprocess uniform injection

Depth buffer preservation

Network controlled shaders

2D Game Rendering

While I consider my 3D work more interesting, I also have experience working with 2D rendering.

For this project I was required to build a 2D game engine from scratch using PyGame, an SDL/OpenGL wrapper for Python. Due to the overhead caused by the choice of language, optimizations were crucial to achieve real-time execution.

Our game required scenes to be built dynamically from many small tiles during runtime. This seemed problematic given the cost of managing PyGame sprite objects. To address the performance concerns, I came up with two key optimizations:

- Managed terrain data in continuous buffers, using draw calls during a generation step to fill the buffer and then a single call per frame to handle all the terrain at once.

- Provided a new implementation for PyGame's sprite groups capable of handling scrolling via transformations without the need to iterate through sprites.
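The two optimizations above can be sketched without PyGame, as a rough illustration only. Plain nested lists stand in for surfaces, and `bake_terrain`, `draw_terrain`, and `ScrollingGroup` are hypothetical names rather than the project's API.

```python
TILE = 2  # tile size in pixels (tiny, for illustration)


def bake_terrain(tile_ids, tile_pixels):
    """Generation step: draw every tile once into one contiguous buffer."""
    rows, cols = len(tile_ids), len(tile_ids[0])
    buffer = [[0] * (cols * TILE) for _ in range(rows * TILE)]
    for r, row in enumerate(tile_ids):
        for c, tile_id in enumerate(row):
            for y in range(TILE):
                for x in range(TILE):
                    buffer[r * TILE + y][c * TILE + x] = tile_pixels[tile_id][y][x]
    return buffer


def draw_terrain(buffer, camera_x, camera_y, view_w, view_h):
    """Per frame: a single rectangular copy out of the baked buffer."""
    return [row[camera_x:camera_x + view_w]
            for row in buffer[camera_y:camera_y + view_h]]


class ScrollingGroup:
    """Keeps sprites in world space; scrolling only changes one offset."""

    def __init__(self):
        self.sprites = []      # list of (world_x, world_y, name)
        self.offset = (0, 0)

    def scroll_to(self, x, y):
        self.offset = (x, y)   # constant time: no per-sprite update

    def screen_positions(self):
        """Apply the offset as a transformation at draw time."""
        ox, oy = self.offset
        return [(x - ox, y - oy, name) for x, y, name in self.sprites]
```

The design point is that the per-tile cost is paid once during generation, and that scrolling becomes a change to a single offset instead of an update to every sprite.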

General Processing on GPU

My previous lines of research have focused on High Performance Computing applied to scientific applications and Machine Learning. To perform parallel computations at a massive scale, I've made use of GPGPU APIs such as CUDA and OpenCL.

This Website!

My portfolio website uses WebGL to render animated visuals in the background via shaders.

Producing code that works on different kinds of devices — such as GPUs from multiple manufacturers or mobile platforms — is a very valuable skill when working on graphics.

Despite JavaScript not being my expertise, my knowledge of OpenGL in C/C++/Java allows me to adapt and learn similar workflows more quickly.

Conjuring Brings Trouble

Conjuring Brings Trouble is an action RPG with roguelite elements developed within Minecraft (a custom engine built with OpenGL; these visual effects are implemented in GLSL). It was made as a birthday gift in a little over a week. I took part in design, programming, and graphics. The project was developed under a strict deadline, so scoping the work and catching organizational and design issues early was crucial to keeping the workload reasonable.

We wanted to deliver a strong visual identity catering to our target player specifically. For this reason we chose a striking style with strong contrast and characteristic elements themed around sorcery. The experience aimed for visual and playable cohesion, presenting gameplay elements through these effects. Integrating visual elements into the design from the beginning allowed us to make better use of their potential. Below is a sample of markers used to guide players to distant objectives. I also developed a voxel-based system so that our level designers could define areas in which skyboxes, fog effects, etc. would be triggered. Rendering effects in real time allows for great versatility, such as in the animation to the right, which achieves smooth transitions through the use of SDFs.

Raymarching

I sometimes use raymarching to render objects based on their Signed Distance Fields. The scene is sampled through a series of rays, and each ray advances by the distance to the closest element in the scene measured at the previous iteration. This is a simple yet powerful method, capable of rendering complex objects from mathematical definitions instead of vertices and triangles, which enables fractals, smooth transitions between models, outlines, etc.
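A minimal sketch of this stepping scheme (often called sphere tracing) is shown below in Python rather than GLSL. The function names, step limits, and tolerances are assumptions chosen for illustration. The marcher also records the closest approach to the surface, and the normal is estimated from the gradient of the SDF by central differences.

```python
import math


def sdf_box(p, half):
    """Signed distance from point p to an axis-aligned box centred at the origin."""
    q = [abs(p[i]) - half[i] for i in range(3)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside


def raymarch(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """Return (hit distance or None, closest approach to the surface)."""
    t, closest = 0.0, float("inf")
    for _ in range(max_steps):
        p = [origin[i] + direction[i] * t for i in range(3)]
        d = sdf(p)
        closest = min(closest, d)
        if d < eps:
            return t, closest
        t += d  # safe step: no surface is nearer than d
        if t > max_dist:
            break
    return None, closest


def normal(p, sdf, h=1e-3):
    """Estimate the surface normal as the normalised gradient of the SDF."""
    g = [sdf([p[j] + (h if j == i else 0.0) for j in range(3)])
         - sdf([p[j] - (h if j == i else 0.0) for j in range(3)])
         for i in range(3)]
    length = math.sqrt(sum(c * c for c in g))
    return [c / length for c in g]
```

For a ray that misses, the second return value is how close it came to the object, which is the quantity a glow or outline effect can be driven from.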

This example uses raymarching to render a 3D cube onto a skybox. The glowing effect surrounding the geometry is achieved nearly free of cost by taking the minimum distance at which the ray passed the cube and displacing it with some noise. Normals for lighting can easily be computed from the 3D gradient of the SDF. In this case, I was looking for a pixelated look, which I got by projecting the skybox geometry onto a box, quantizing based on the 2D face coordinates, and reprojecting onto a sphere.
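The pixelation step can be sketched as follows, again in Python instead of shader code. The function name `pixelate_direction` and the `cells` parameter are hypothetical, and the input direction is assumed to be non-zero.

```python
import math


def pixelate_direction(direction, cells=8):
    """Snap a view direction to the centre of a cell on a unit cube face."""
    # Project onto the cube: scale so the dominant axis has magnitude 1.
    major = max(range(3), key=lambda i: abs(direction[i]))
    scale = 1.0 / abs(direction[major])
    on_cube = [c * scale for c in direction]
    # Quantize the two in-face coordinates (range [-1, 1]) to cell centres.
    snapped = []
    for i, c in enumerate(on_cube):
        if i == major:
            snapped.append(c)  # stays at +1 or -1
        else:
            cell = min(int((c + 1.0) * 0.5 * cells), cells - 1)
            snapped.append((cell + 0.5) / cells * 2.0 - 1.0)
    # Reproject onto the unit sphere.
    length = math.sqrt(sum(c * c for c in snapped))
    return [c / length for c in snapped]
```

Every direction that falls inside the same cell maps to the same output vector, so sampling the sky with the snapped direction produces flat, blocky regions.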

Custom Canvas Rendering

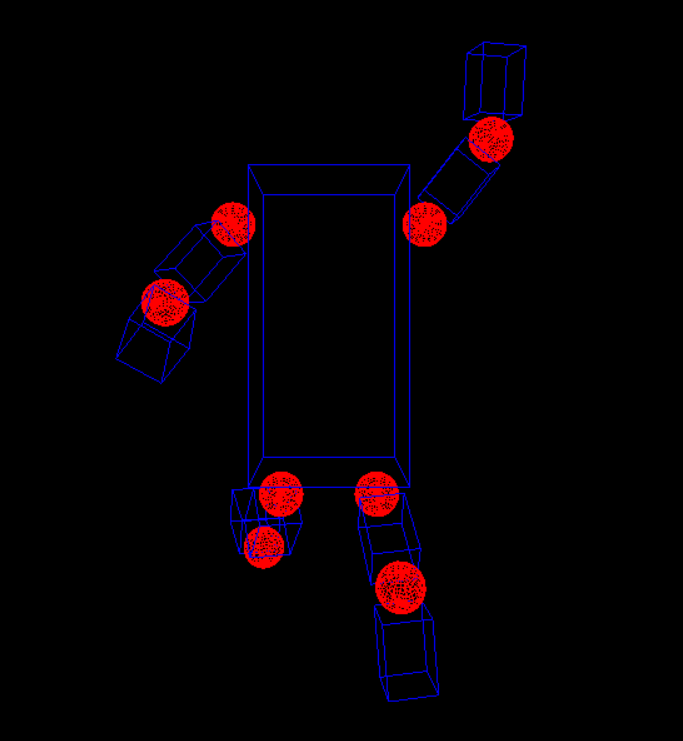

Capture Kings 2 is a PvP capture-the-point game within Minecraft. It features a skill system, which we wanted to showcase as part of its tutorial. For visual appeal, we decided to add a screen with animations featuring the player's custom character.

However, our control over the game's visuals is very limited without modifying its executable. Dynamically rendering player models under these limitations proved to be an interesting engineering challenge.

First, I created software to capture vertex data (positions, lighting, etc.) in a way that would also allow replaying gameplay in order to record matching footage (for backdrops, VFX, other subjects), as well as an alpha mask for player models.

This data was then compressed and coded into a vertex shader that would find the current frame, extract the data, and pass it to the fragment shader. The fragment shader would then draw the model applying perspective transformations.

Untold Stories 8

Untold Stories 8: Burnout Fantasy is an action survival experience within Minecraft. My role was to produce visual effects using an experimental technique I developed to hijack the game's transparency rendering. Since most players had never seen this in use before, we included a check to verify that the system was working as part of our title screen.

To control the system from the server side, I created an instance of a specific particle system in front of the player that would be rendered into a different buffer than other effects and then combined with the scene by the transparency pass. In this step, I could instead read color and position information from the particle to communicate with the GPU.

This allowed us to implement custom postprocessing shaders and produce stylized visuals to match the world's aesthetics depending on the gameplay area. The examples below showcase a "retro" effect (color quantization, scanline effect, and a mild screen distortion) and an area obscured by fog (making use of the depth buffer to compute not only fog but also atmospheric turbulence). Balancing style and playability was crucial during this project.
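As an illustration of the "retro" effect's three ingredients, the sketch below applies them to a single pixel in Python. The real effect runs as a postprocessing shader; the function names and default parameters here are assumptions, not values from the project.

```python
def retro_pixel(color, uv, levels=4, scanline_strength=0.25, screen_height=240):
    """Apply colour quantization and scanline darkening to one pixel.

    color is (r, g, b) with channels in [0, 1]; uv is (u, v) in [0, 1].
    """
    # Quantize each channel to a fixed number of levels.
    quantized = [round(c * (levels - 1)) / (levels - 1) for c in color]
    # Darken every other row to imitate CRT scanlines.
    row = int(uv[1] * screen_height)
    factor = 1.0 - scanline_strength if row % 2 else 1.0
    return tuple(c * factor for c in quantized)


def barrel_distort(uv, amount=0.1):
    """Mild screen distortion: push coordinates outward with squared radius."""
    x, y = uv[0] * 2.0 - 1.0, uv[1] * 2.0 - 1.0
    k = 1.0 + amount * (x * x + y * y)
    return ((x * k + 1.0) * 0.5, (y * k + 1.0) * 0.5)
```

In a shader, the distorted coordinates would be used to sample the scene texture before the colour is quantized.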

Crimson Bay's Treasure

For this project, I was asked to reproduce the visuals of a piece of concept art by Egor Poskryakov as a 3D environment. The idea was to have a title screen matching the view from the reference, and then have players smoothly transition into gameplay within that scenery.

Part of achieving this effect was having 3D models matching the customized player characters standing in the same position as the subject in the original artwork. The game's engine didn't allow me to create player models, but through the use of vertex shaders I was able to displace geometry in just the right way to achieve the desired effect.

My workflow for this element involved implementing a script in the 3D modeling software Blender to be able to define poses within the 3D scene and export them into a custom optimized data structure to embed within the vertex shader. This allowed me to adjust the model placements easily without having to manually nudge each triangle into place.

Custom Rendering Pipelines in Unity

I also have experience working within general-purpose game engines such as Unity. While my expertise is in lower-level implementations, making use of the engine's features for technical artists unlocks some very interesting workflows.

Since the addition of scriptable render pipelines in 2018, it's been possible to tweak the way the engine produces visuals at a much finer level. I have used this flexibility to implement, for instance, multipass rendering, which I used to create an inverted hull outline effect through a second pass over the geometry.
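The idea behind the outline's second pass can be sketched on the CPU as follows. In an engine this would normally happen in a vertex shader with front-face culling; the `inverted_hull` function and its `thickness` parameter are hypothetical and only illustrate the geometry.

```python
def inverted_hull(vertices, normals, triangles, thickness=0.05):
    """Build the outline shell for a mesh.

    Each vertex is pushed outward along its normal, and the triangle winding
    is reversed so that only the shell's inner faces survive back-face culling.
    """
    shell_vertices = [
        tuple(v[i] + n[i] * thickness for i in range(3))
        for v, n in zip(vertices, normals)
    ]
    shell_triangles = [(a, c, b) for a, b, c in triangles]
    return shell_vertices, shell_triangles
```

Drawing the shell in a flat colour behind the original mesh leaves a rim of that colour visible around the silhouette.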

For the Game Maker's Toolkit 2024 Game Jam, my team worked on a small 3D adventure themed around wizardry. To sell the impact of magic, I worked on visual effects making use of Unity's shader and VFX graphs. These tools allow me to iterate quickly on visuals, which makes it more convenient to integrate them with gameplay.

Multipass render feature

Render pass

Massive Parallelism

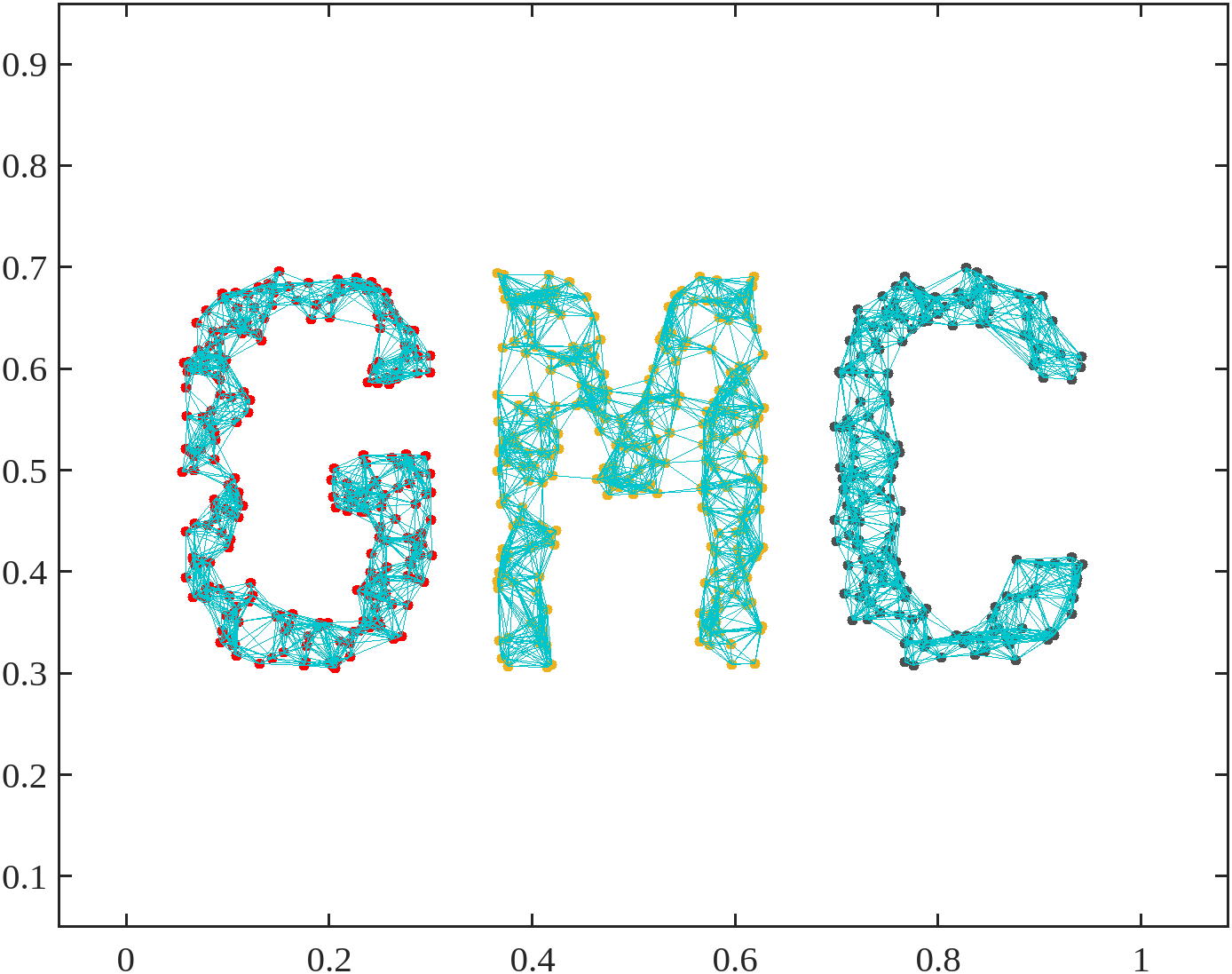

My bachelor's thesis consisted of designing and implementing a parallel algorithm for multi-view data clustering in High Performance Computing environments, making use of graph theory.

I chose to implement the algorithm in C, as was fitting for low-level, high-throughput data management. I applied numerous optimization techniques, focusing on multithreading with OpenMP and multiprocessing with MPI.

The resulting algorithm ran over 3600 times faster than the state of the art on a modestly sized cluster. This work earned me a special distinction, a perfect score, and the award for the best thesis in my faculty.

Competitive Programming

For a few years, I took up competitive programming as a way to improve my problem solving, algorithmics, and teamwork. C++ was my language of choice during the competitions, which has allowed me to become acquainted with language features and improve my general proficiency.

During this time, I became the top performer at a regional level in Galicia, with my team winning the inter-university regional competition two years in a row. We were invited to the Spanish nationals, where we also performed among the top teams in the country.

The code produced during these events is rushed and unfit for professional projects, but it still serves as good practice for highly performant algorithms: Code samples on GitHub

Plugin Loading in Breath of the Wild

I love tinkering with game internals, and as a result have worked on mods for quite a few titles. A few years back I got interested in performing code edits on the Nintendo Switch release of The Legend of Zelda: Breath of the Wild. While modding for the Wii U release was relatively well understood at the time, loading code on the Switch version, and on real hardware, proved more difficult.

At this point, the community had partially ported over a set of development tools that were originally designed for Super Smash Bros. Ultimate. However, the key component I needed, running custom code on hooks via plugins, was missing. As such, I decided to reimplement it myself, taking the Smash version as a reference.

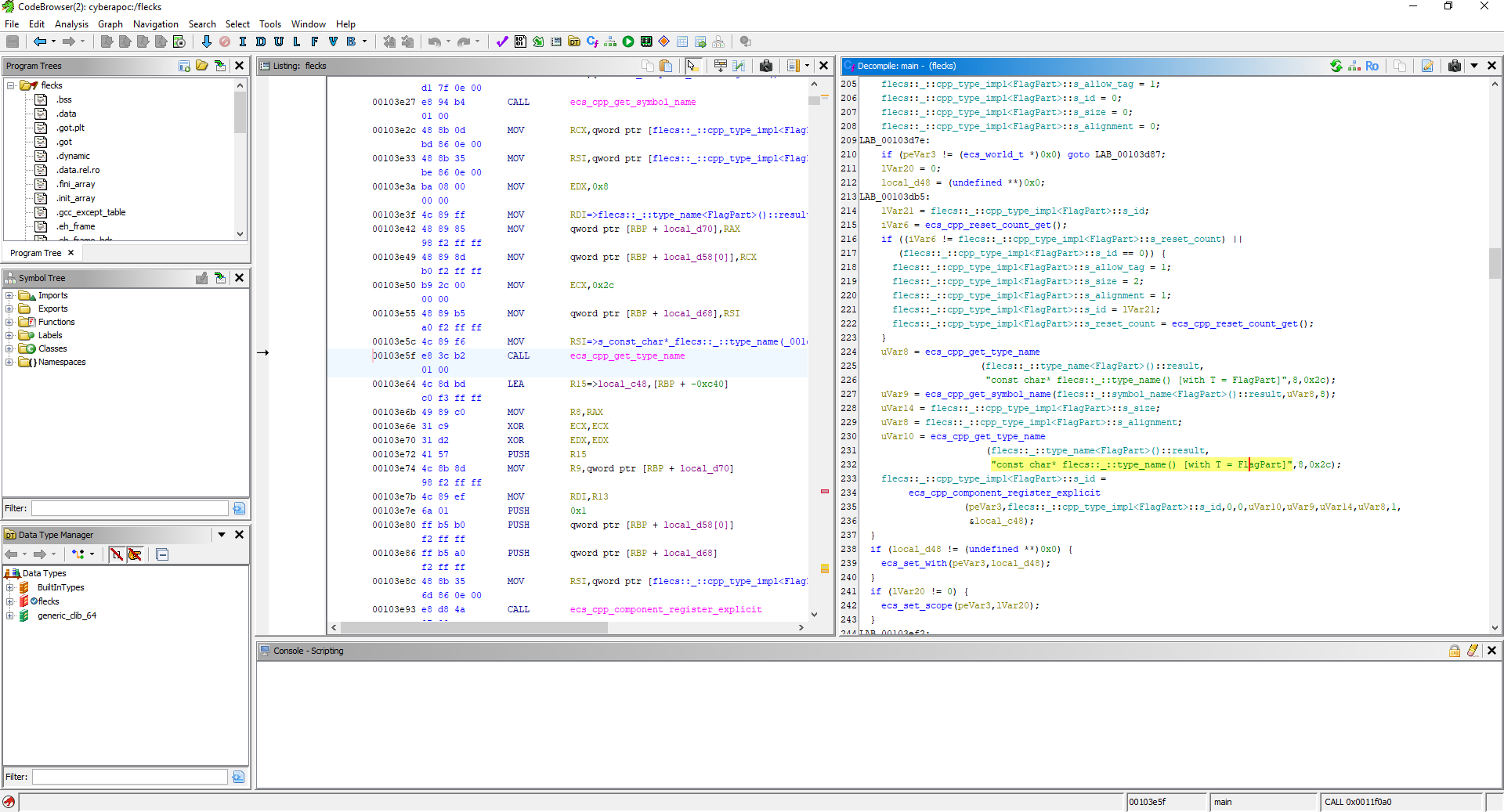

Binary Reverse Engineering

Out of my interest in understanding and modifying others' code, I have learned to analyze compiled binaries without sources or debugging symbols. This unusual skill has earned me awards at multiple hacking competitions, including first place in the Galician universities' C3TF and 19th place in one of the biggest global competitions, NahamConCTF.

The example on the right showcases a challenge with an executable containing a game engine written in C++ that makes use of the open-source ECS library Flecs. I was able to understand the machine code logic and retrieve the data I needed.

Preprocessing Data for Games

At the start of 2021, our development team noticed that the URLs where user-uploaded images were located on Mojang's servers corresponded to their SHA-256 hashes. By controlling these hashes, it should be possible to transmit arbitrary data to clients consuming the API. This would allow web servers to communicate with applications written to run within Minecraft, something that was thought to be completely impossible.

We started work on developing the fastest way to compute all the hashes we would need. At first, the estimated runtime was over 2 years. However, after numerous optimizations, we were able to generate an extremely compressed database weighing in at 8GB, with the information corresponding to 235 individual images in a couple of hours, which allowed us to reliably transmit 32 bits of information per hash. Once the computation was complete, subsequent queries to this database were instantaneous, allowing us to use them in a game service.
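The core idea, precomputing which payload produces which hash bits so that a value can later be looked up instantly, can be sketched as below. This is a toy version under stated assumptions: it reads the value from the leading bits of the digest and stores the table in a dictionary, neither of which is confirmed by the description above, and the function names are hypothetical.

```python
import hashlib


def prefix_of(data, bits):
    """Leading `bits` bits (at most 32) of the SHA-256 digest of `data`."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest[:4], "big") >> (32 - bits)


def build_prefix_table(candidates, bits):
    """Map every reachable hash prefix to one candidate that produces it."""
    table = {}
    for data in candidates:
        table.setdefault(prefix_of(data, bits), data)  # keep the first match
    return table


def encode(table, value):
    """Return a payload whose hash prefix equals `value`, if one is known."""
    return table.get(value)


def decode(data, bits):
    """Recover the transmitted value from the payload's hash."""
    return prefix_of(data, bits)
```

With an 8-bit prefix, a few thousand candidates are enough to cover almost every value; each additional bit doubles the number of prefixes that must be covered, which is why the precomputation dominates the cost.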

Game Modding

Over the years I've dived into the code of many games to try and make my own tweaks. This has taught me a lot about how to properly work with low level game code written by others.

This snippet is a Lua script to be loaded in a multiplayer-adapted version of Half-Life 2, running on Valve's Source engine. During the multiplayer adaptation process, the player physics were altered from those of the base game. Wanting to experiment, I wrote this script to reimplement behavior closely matching the original.

Working with Large Codebases

I have a lot of experience joining big projects with well-established development practices. I am capable of understanding large pieces of code written by others and making significant changes to improve functionality while preserving existing behavior.

During my time working at Sngular, I was part of a project focusing on automated code generation based on web API definitions (OpenAPI, AsyncAPI). I was tasked with extending generation to be able to handle nested type definitions. Due to the existing typing system lacking versatility, I decided to reimplement it from the ground up with support for recursion. My solution handled the complex data structures we were targeting, and preserved all the functionality stakeholders relied upon, as verified by our TDD methodology.
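To illustrate why recursion suits nested type definitions, here is a small sketch that renders an OpenAPI-style schema as a type string. It is not the generator's code: the `resolve_type` function, the `PRIMITIVES` table, and the output names (`List`, `Map`, `Object`) are all assumptions made for the example.

```python
PRIMITIVES = {"string": "str", "integer": "int", "number": "float", "boolean": "bool"}


def resolve_type(schema):
    """Render a (possibly nested) OpenAPI-style schema as a type string."""
    if "$ref" in schema:
        # Named types are referenced, not expanded, so self-references terminate.
        return schema["$ref"].rsplit("/", 1)[-1]
    kind = schema.get("type")
    if kind == "array":
        return f"List[{resolve_type(schema['items'])}]"
    if kind == "object":
        extra = schema.get("additionalProperties")
        if isinstance(extra, dict):
            return f"Map[str, {resolve_type(extra)}]"
        return "Object"
    return PRIMITIVES[kind]
```

Because each container case calls back into the same function, arbitrarily deep combinations such as a list of maps of lists need no special handling.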

Game Engine Structure

In the past, I have built simple game engines from scratch on top of libraries such as SDL, LWJGL, and PyGame. For one of my university courses, I needed to team up with other students to create a game with PyGame (an SDL and OpenGL wrapper for Python). Due to my previous experience working with games, I directed the project, providing guidance on the best approaches to implement different aspects of the engine given our resources and needs.

We needed to load and store various kinds of game resources in memory, making sure they were efficiently accessible when needed and didn't waste memory (for instance, with multiple copies of an identical resource). To achieve this, we implemented a resource manager that mapped development-friendly string IDs to resources, loaded them on demand, and stored them in hashmaps.

One kind of resource that could take up a lot of space was music. We wanted to manage it efficiently so as to minimize loading times and ensure smooth playback. For this reason, we built a sound controller that streamed music from the sound files and used threads to manage that process without interrupting other components.
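A minimal sketch of such a manager is shown below. The class and method names are hypothetical, and loaders are assumed to be zero-argument callables (in a PyGame project they would wrap calls that read files from disk).

```python
class ResourceManager:
    """Loads each resource at most once and hands out the shared instance."""

    def __init__(self):
        self._loaders = {}  # string ID -> zero-argument loader function
        self._cache = {}    # string ID -> loaded resource

    def register(self, resource_id, loader):
        """Associate a string ID with the function that loads the resource."""
        self._loaders[resource_id] = loader

    def get(self, resource_id):
        """Return the resource, loading it on first use only."""
        if resource_id not in self._cache:
            self._cache[resource_id] = self._loaders[resource_id]()
        return self._cache[resource_id]

    def unload(self, resource_id):
        """Drop the cached copy so its memory can be reclaimed."""
        self._cache.pop(resource_id, None)
```

Every caller asking for the same ID receives the same object, which is what prevents duplicate copies of an identical resource.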

Another requirement for our game was storing player progress in a persistent way. To do it, we created data structures to model the game state in a serializable way, so that data could then be stored to disk.
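One way to model game state so that it serializes cleanly is with dataclasses and JSON, as in the sketch below. The field names are invented for the example, and the project may well have used a different format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PlayerState:
    health: int = 100
    upgrades: list = field(default_factory=list)


@dataclass
class GameModel:
    level: int = 1
    player: PlayerState = field(default_factory=PlayerState)

    def to_json(self) -> str:
        """Flatten the nested dataclasses into a JSON string."""
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "GameModel":
        """Rebuild the model, including the nested player state."""
        raw = json.loads(text)
        return cls(level=raw["level"], player=PlayerState(**raw["player"]))
```

The string returned by `to_json` can be written to a save file and later passed to `from_json` to restore progress.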

We also implemented a system to manage player input. This manager could be used globally to easily set both polling and event-based triggers and allowed us to easily rebind controls during gameplay, abstracting the details away into a self-contained package.
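The sketch below shows one possible shape for such a manager, supporting polling, event callbacks, and rebinding. The names are hypothetical and key codes are plain strings here; with PyGame they would be key constants fed in from the event loop.

```python
class InputManager:
    """Maps abstract actions to keys so game code never names a key directly."""

    def __init__(self, bindings):
        self.bindings = dict(bindings)  # action name -> key code
        self.pressed = set()            # keys currently held down
        self.listeners = {}             # action name -> list of callbacks

    def rebind(self, action, key):
        """Change the key for an action; safe to call during gameplay."""
        self.bindings[action] = key

    def on(self, action, callback):
        """Event-based trigger: run callback when the action's key goes down."""
        self.listeners.setdefault(action, []).append(callback)

    def is_active(self, action):
        """Polling: is the key bound to this action currently held?"""
        return self.bindings[action] in self.pressed

    def handle_key(self, key, down):
        """Feed raw key events in; fires listeners for actions bound to the key."""
        if down:
            self.pressed.add(key)
            for action, bound in self.bindings.items():
                if bound == key:
                    for callback in self.listeners.get(action, []):
                        callback()
        else:
            self.pressed.discard(key)
```

Because game code refers only to action names such as `"jump"`, rebinding a control is a single dictionary update.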

The game was structured in abstract objects we defined as "scenes". We built this system in a versatile way, with a director that takes care of the main game loop managing scenes in a stack structure. The scene at the top of the stack is active, and game logic is delegated to it. The scene then ticks the entities it contains, which in turn tick their components in a hierarchical structure. A big advantage of this open-ended definition for scenes is that multiple scenes can be combined in powerful ways. A scene may be pushed onto the stack to create a GUI that pauses gameplay, like a pause or upgrade menu. A scene may contain a reference to another and make use of its properties.
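The stack-based delegation described above can be sketched in a few lines. `Scene` and `Director` follow the terminology of the text, but the methods and attributes are assumptions made for illustration.

```python
class Scene:
    """A unit of game logic; real scenes would tick their entities here."""

    def __init__(self, name):
        self.name = name
        self.ticks = 0

    def update(self, dt):
        self.ticks += 1


class Director:
    """Owns the main loop and delegates each tick to the top scene."""

    def __init__(self):
        self.stack = []

    def push(self, scene):
        self.stack.append(scene)

    def pop(self):
        return self.stack.pop()

    def tick(self, dt):
        """One iteration of the main loop: only the top scene runs."""
        if self.stack:
            self.stack[-1].update(dt)
```

Pushing a menu scene pauses the gameplay scene beneath it without any explicit pause flag, and popping the menu resumes gameplay exactly where it stopped.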

An example of these capabilities in action is our multithreaded loading system, which makes use of a transition scene that gets pushed as a wrapper surrounding the scene we want to load.

As I mentioned, each scene may contain a number of entities, each of which defines what it needs to run during the game loop, render on the screen, etc. We built our simple ECS in a hierarchical structure. For instance, the player entity inherits from "living entity", a kind of entity that manages health points and damage. Living entities are, in turn, "kinematic entities", affected by physics. Kinematic entities derive from the base entity, which defines nearly no behavior besides memory management and acts as a blank slate to build upon. The player entity also manages components for its controls, audio, graphics, abilities, and more. This structure allowed us to expand content while minimizing the amount of code we needed to write to implement new behaviors.

A key component of gameplay was generating terrain procedurally. To achieve this, I designed an algorithm that divided space into a grid that was explored as a graph, with the search being guided by evaluating simplex gradient noise over the 2D space. This approach allowed me to define a base generator, with multiple kinds of generators inheriting from it, leading to different types of terrain. Coordinate transformations, additional checks on the noise, and rules for sprite placement could all be overridden, leading to many possibilities for generation.
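A transition scene of this kind might look like the sketch below. It is a simplified assumption of the design: the wrapped scene is built by a `build_scene` callable on a worker thread, and the scene stack is a plain list.

```python
import threading


class LoadingScene:
    """Transition scene that wraps the construction of another scene."""

    def __init__(self, build_scene):
        self.result = None
        self._thread = threading.Thread(target=self._load, args=(build_scene,))
        self._thread.start()

    def _load(self, build_scene):
        # Heavy work happens off the main thread.
        self.result = build_scene()

    def update(self, stack):
        """Called each frame while on top; swaps in the scene once it is ready."""
        if not self._thread.is_alive():
            stack.pop()                # remove this transition scene
            stack.append(self.result)  # activate the loaded scene
            return True
        return False                   # still loading: keep animating
```

While the worker runs, the main loop keeps ticking the transition scene, so it can draw a loading animation instead of freezing the window.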
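The inheritance chain described above can be sketched as follows. The class names mirror the text, while the attributes, the one-dimensional physics, and the `TickCounter` component are hypothetical simplifications.

```python
class Entity:
    """Base entity: a blank slate that only ticks its components."""

    def __init__(self):
        self.components = []

    def update(self, dt):
        for component in self.components:
            component.update(self, dt)


class KinematicEntity(Entity):
    """Entity affected by (here, very simplified) physics."""

    def __init__(self):
        super().__init__()
        self.position, self.velocity = 0.0, 0.0

    def update(self, dt):
        self.position += self.velocity * dt
        super().update(dt)


class LivingEntity(KinematicEntity):
    """Kinematic entity that manages health points and damage."""

    def __init__(self, health):
        super().__init__()
        self.health = health

    def damage(self, amount):
        self.health = max(0, self.health - amount)


class TickCounter:
    """Example component: counts how many times its owner was updated."""

    def __init__(self):
        self.count = 0

    def update(self, entity, dt):
        self.count += 1


class Player(LivingEntity):
    def __init__(self):
        super().__init__(health=100)
        self.components.append(TickCounter())
```

A new enemy type would only need to subclass `LivingEntity` and attach its own components to inherit movement, health, and damage handling.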
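The sketch below shows the general shape of such a generator: a breadth-first search over grid cells that only expands into cells accepted by a noise check, with hooks that subclasses can override. To stay dependency-free it swaps simplex noise for a hash-based pseudo-random value per cell, so it illustrates the structure rather than the look of the terrain; all names are hypothetical.

```python
import hashlib
from collections import deque


def noise(x, y, seed=0):
    """Deterministic pseudo-random value in [0, 1) per cell (simplex stand-in)."""
    digest = hashlib.sha256(f"{seed}:{x}:{y}".encode()).digest()
    return int.from_bytes(digest[:4], "big") / 2**32


class BaseGenerator:
    threshold = 0.35

    def transform(self, x, y):
        """Coordinate transformation hook; subclasses may override."""
        return x, y

    def accepts(self, x, y):
        """Noise check hook: may the search expand into this cell?"""
        return noise(*self.transform(x, y)) >= self.threshold

    def generate(self, start, width, height):
        """Breadth-first search over the grid, expanding only accepted cells."""
        walkable, frontier = {start}, deque([start])
        while frontier:
            x, y = frontier.popleft()
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                cell = (nx, ny)
                if (0 <= nx < width and 0 <= ny < height
                        and cell not in walkable and self.accepts(nx, ny)):
                    walkable.add(cell)
                    frontier.append(cell)
        return walkable


class OpenFieldGenerator(BaseGenerator):
    threshold = 0.0  # every cell passes, so the whole grid is reachable
```

Because the search grows outward from the start cell, every generated cell is guaranteed to be reachable from it, which a plain per-cell noise threshold would not ensure.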

Resource manager

Sound controller

Game model

Control system

Scenes: director

Scenes: multithreaded loading

Entity

Terrain generation

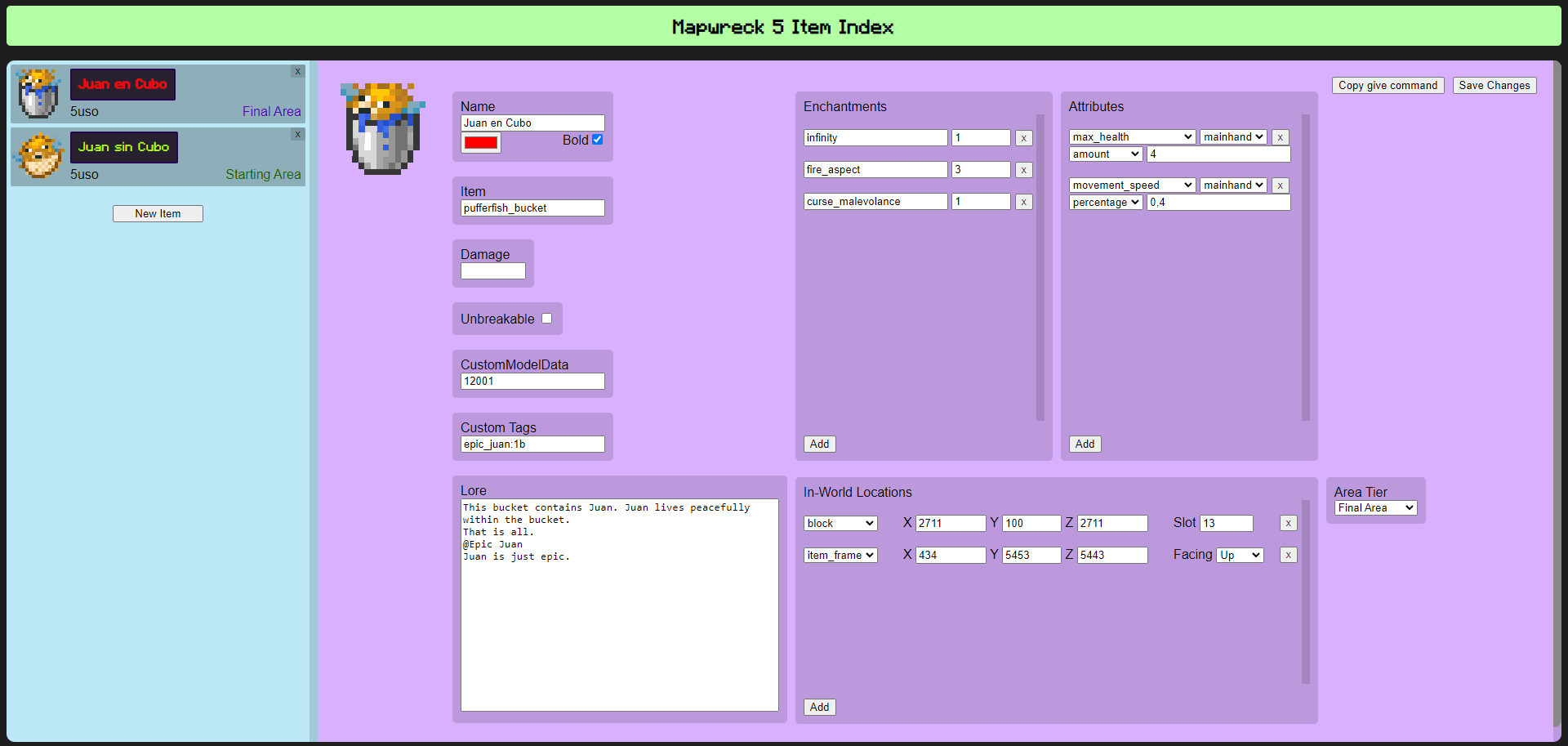

Managing Concurrent Work for Large Teams

In August 2022, I was put in charge of directing a project with the aim of producing an action RPG experience between 50 creators within a one-week time limit. One of the challenges we faced was that we wanted to provide players with loot items distributed across the map, and needed a standard way to generate and balance them, with members of the project being able to push updates on the fly.

My solution was to put together a tool we could self-host on GCP. In it, contributors could manage loot data and distribution, seeing the changes other users made in real time. It featured an easy-to-use interface, tracked changes, and serialized data into a machine-readable format so that it could be automatically integrated in-game. This allowed us to coordinate smoothly during the fast development process, producing cohesive and balanced loot across the game even with a large team making simultaneous changes.

Getting Projects Back on Track

Divinity's End is an action RPG experience developed within Minecraft lasting about 30 hours. My role was that of project leader, designer, and programmer. I was in charge of coordinating an international team of 25 creators, also managing the version control and quality assurance processes.

The project had been stalled for years, and I was brought on board to finally get it back on track. We achieved this goal within a few months of work, hitting our target release window. I also coordinated the release process and handled post-launch updates. In the end, the map received glowing reviews, going on to become one of the highest rated by the community, and has been played over 40,000 times.